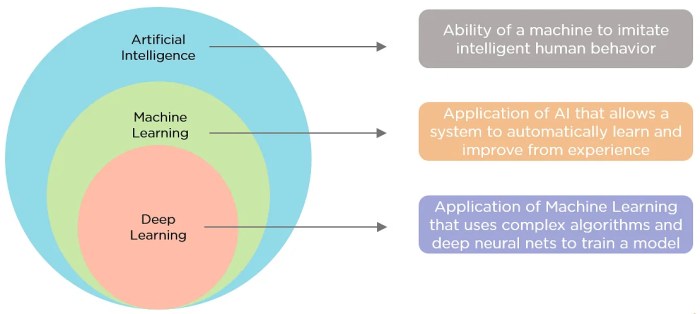

Machine Learning, at its core, is a transformative field that empowers computers to learn from data without explicit programming. This ability to analyze patterns and make predictions from vast datasets has revolutionized various industries, from healthcare to finance, enabling groundbreaking advancements and shaping the future of technology.

Machine learning encompasses a wide range of algorithms, each designed to address specific tasks. Supervised learning, for instance, trains models on labeled data to predict outcomes, while unsupervised learning uncovers hidden patterns in unlabeled data. Reinforcement learning, on the other hand, allows agents to learn through trial and error, optimizing actions based on rewards and penalties.

Model Training and Evaluation

In supervised learning, training a model involves feeding it a dataset of labeled examples so that it can learn the patterns and relationships linking inputs to outputs. The goal is not to memorize the training set but to make accurate predictions on unseen data.

Evaluation Metrics

Evaluation metrics are essential for assessing the performance of a trained model. When computed on data the model did not see during training, they indicate how well the model generalizes to new data.

- Accuracy: The proportion of correctly classified instances. For example, if a model correctly predicts 90 out of 100 instances, its accuracy is 90%.

- Precision: The proportion of correctly predicted positive instances out of all instances predicted as positive. For instance, if a spam detection model flags 100 emails as spam and 80 of them really are spam, its precision is 80%.

- Recall: The proportion of correctly predicted positive instances out of all actual positive instances. If an inbox contains 100 spam emails and the spam detection model catches 80 of them, its recall is 80%.

- F1-Score: The harmonic mean of precision and recall. It provides a balanced measure of model performance, considering both false positives and false negatives. For example, a model with precision and recall both at 0.8 has an F1-score of 0.8; if either one drops, the F1-score is pulled toward the lower value.
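The four metrics above can all be derived from the counts of true positives, false positives, false negatives, and true negatives. The following is a minimal sketch; the function name `classification_metrics` and the spam-filter counts are illustrative assumptions, not taken from a real system.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical spam filter evaluated on 1,000 emails:
# 80 spam caught, 20 legitimate emails wrongly flagged,
# 20 spam missed, 880 legitimate emails correctly passed.
metrics = classification_metrics(tp=80, fp=20, fn=20, tn=880)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these made-up counts, accuracy is noticeably higher than precision and recall because most emails are legitimate. This is one reason accuracy alone can be misleading on imbalanced data.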

Cross-Validation

Cross-validation is a technique for estimating model performance on unseen data. It involves dividing the dataset into multiple folds. The model is trained on all but one of the folds and evaluated on the held-out fold. This process is repeated so that each fold serves as the held-out set once, and the average performance across all folds is used to assess the model’s generalization ability.

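Cross-validation helps to detect overfitting, where a model performs well on the training data but poorly on unseen data. It does not prevent overfitting by itself, but it gives a more reliable signal than a single train/test split for choosing between models.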
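The fold-by-fold procedure can be sketched in plain Python. To stay self-contained, the "model" here is a trivial majority-class predictor, and the helper names (`k_fold_splits`, `cross_validate`) and the label data are assumptions made for illustration only.

```python
import random
from collections import Counter
from statistics import mean


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) once for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k folds of near-equal size
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


def cross_validate(labels, k=5):
    """Return the mean accuracy and per-fold accuracies of a majority-class baseline."""
    scores = []
    for train_idx, test_idx in k_fold_splits(len(labels), k):
        # "Training": remember the most common label among the training folds.
        prediction = Counter(labels[i] for i in train_idx).most_common(1)[0][0]
        # "Evaluation": accuracy on the held-out fold.
        correct = sum(labels[i] == prediction for i in test_idx)
        scores.append(correct / len(test_idx))
    return mean(scores), scores


# Hypothetical dataset: 70 positive and 30 negative labels.
labels = [1] * 70 + [0] * 30
avg_accuracy, fold_scores = cross_validate(labels, k=5)
print(f"mean accuracy: {avg_accuracy:.2f}")
print("per-fold accuracy:", fold_scores)
```

A real workflow would replace the majority-class step with fitting an actual model on the training folds; the splitting and averaging logic stays the same.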

Hyperparameter Tuning

Hyperparameters are parameters that are not learned from the data but are set before training. Tuning these hyperparameters is crucial for optimizing model performance.

- Grid Search: A method that systematically tries all combinations of hyperparameters within a specified range. This can be computationally expensive but provides a comprehensive search.

- Random Search: A method that randomly samples hyperparameters from a distribution. It can be more efficient than grid search, especially when dealing with a large number of hyperparameters.

- Bayesian Optimization: A method that uses a probabilistic model to guide the search for optimal hyperparameters. It can be more efficient than grid search and random search, particularly for complex optimization problems.
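The difference between grid search and random search can be illustrated with a small sketch. The `validation_score` function below is a hypothetical stand-in for "train a model with these hyperparameters and return its validation score", and the hyperparameter names and ranges are assumptions chosen for the example. Bayesian optimization is omitted because it needs a surrogate model, which is usually provided by a dedicated library.

```python
import itertools
import random


def validation_score(learning_rate, max_depth):
    """Hypothetical objective standing in for training plus validation."""
    return -((learning_rate - 0.1) ** 2) - 0.01 * (max_depth - 5) ** 2


def grid_search(grid):
    """Evaluate every combination of the listed values; return (score, params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(n_trials, seed=0):
    """Sample hyperparameters from distributions; return (score, params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
            "max_depth": rng.randint(2, 10),
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "max_depth": [3, 5, 7]}
best_grid = grid_search(grid)              # 4 x 3 = 12 evaluations
best_random = random_search(n_trials=12)   # same budget, sampled values
print("grid search:  ", best_grid)
print("random search:", best_random)
```

Both searches use the same budget of 12 evaluations here. Grid search only ever sees the listed values, while random search tries a different value of each hyperparameter on every trial, which is part of why it tends to scale better as the number of hyperparameters grows.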

Ethical Considerations in Machine Learning

Machine learning's ability to analyze vast amounts of data and make predictions also raises significant ethical concerns. As these systems become more prevalent in everyday life, it is crucial to address those concerns and ensure that the technology is developed and deployed responsibly.

Bias and Fairness

Bias in machine learning refers to the systematic errors or disparities in the outcomes of a model due to prejudiced data or algorithms. This can lead to unfair treatment of certain groups of people. For example, a facial recognition system trained on a dataset predominantly composed of light-skinned individuals might perform poorly on individuals with darker skin tones.

- Data Bias: The data used to train machine learning models can reflect existing societal biases. For instance, if a dataset used to train a loan approval system is skewed towards individuals with higher credit scores, the model might perpetuate existing financial inequalities.

- Algorithmic Bias: Even with unbiased data, the algorithms themselves can introduce bias. For example, a machine learning model used for hiring decisions might prioritize candidates with specific keywords in their resumes, inadvertently excluding qualified individuals who use different language or phrasing.

To mitigate bias and ensure fairness, it is essential to:

- Collect diverse and representative data: Ensuring that the training data reflects the diversity of the population is crucial. This can involve actively seeking out underrepresented groups and collecting data from a wide range of sources.

- Develop fair algorithms: Algorithms should be designed to minimize bias and ensure equitable outcomes. This might involve using techniques like fairness-aware learning or algorithmic auditing to identify and address potential biases.

- Regularly monitor and evaluate models: Continuously monitoring the performance of machine learning models and identifying any disparities in their outcomes can help detect and address biases.
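One simple way to monitor outcome disparities is to compare how often the model produces a positive prediction for each group. The sketch below computes per-group selection rates and the gap between the highest and lowest (often called the demographic parity difference). The group labels and predictions are made-up illustrative data, and this is only one of several fairness measures, so it should not be read as a complete audit.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) for applicants in two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(rates)
print("selection rates:", rates)
print("demographic parity gap:", gap)
```

A large gap does not prove the model is unfair, but it is a signal that the outcomes deserve a closer look.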

Privacy

Machine learning often involves collecting and analyzing personal data, raising concerns about privacy. The use of personal data in machine learning systems can lead to unauthorized access, data breaches, or the creation of profiles that could be used for surveillance or discrimination.

- Data Collection: The collection of personal data for machine learning purposes should be done transparently and with the informed consent of individuals.

- Data Security: Robust security measures should be implemented to protect personal data from unauthorized access and breaches.

- Data Minimization: Only the necessary data should be collected and used for machine learning purposes. This helps minimize the potential for privacy violations.

- Data Anonymization: Removing or generalizing identifying fields before training can protect the privacy of individuals while still enabling the use of data for machine learning.
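Data minimization and a basic form of identifier protection can be sketched as a preprocessing step. The example below keeps only an allow-list of fields and replaces the user identifier with a keyed hash. Note that this is pseudonymization, which is weaker than true anonymization because the remaining fields may still allow re-identification. The field names, the allow-list, and the key are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only attributes the model is assumed to need.
ALLOWED_FIELDS = {"age_band", "region"}


def pseudonymize_id(user_id, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record, secret_key):
    """Drop fields outside the allow-list and pseudonymize the user ID."""
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["user_token"] = pseudonymize_id(record["user_id"], secret_key)
    return reduced


# Illustrative record; in practice the key would come from a secrets manager.
secret_key = b"example-key-do-not-use-in-production"
record = {
    "user_id": "user-1234",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age_band": "30-39",
    "region": "EU",
}
print(minimize_record(record, secret_key))
```

Using a keyed hash rather than a plain hash means someone without the key cannot recompute tokens from guessed identifiers.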

Transparency and Explainability

Machine learning models can be complex and difficult to understand, leading to concerns about transparency and explainability. It is essential to be able to understand how these models make decisions, especially in contexts where they have significant impact on individuals’ lives.

- Model Interpretability: Developing techniques to make machine learning models more interpretable can help understand the factors influencing their decisions.

- Auditing and Verification: Regularly auditing and verifying machine learning models can help ensure their accuracy and fairness.

- Human Oversight: Maintaining human oversight over machine learning systems can help ensure that decisions made by these systems are ethically sound and aligned with human values.
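One widely used interpretability technique is permutation importance: shuffle the values of one feature and measure how much the model's accuracy drops. The sketch below applies it to a toy model that, by construction, depends only on the first feature. The model, the data, and the function names are assumptions made for illustration.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        shuffled = [
            row[:feature] + [value] + row[feature + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats


def toy_model(row):
    """Hypothetical classifier that looks only at feature 0."""
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(row) for row in rows]

importance_relevant = permutation_importance(toy_model, rows, labels, feature=0)
importance_irrelevant = permutation_importance(toy_model, rows, labels, feature=1)
print("feature 0 importance:", importance_relevant)
print("feature 1 importance:", importance_irrelevant)
```

Shuffling the feature the model ignores leaves accuracy unchanged, while shuffling the feature it relies on causes a clear drop. The same idea applies to more complex models where the dependence is not known in advance.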

Accountability

Determining who is responsible for the outcomes of machine learning systems is a critical ethical consideration. As these systems become more complex and autonomous, it is essential to establish clear lines of accountability for their actions.

- Algorithmic Transparency: Ensuring transparency in the development and deployment of machine learning algorithms can help clarify who is responsible for their actions.

- Human-in-the-Loop: Incorporating human oversight into machine learning systems can help ensure that they are used responsibly and ethically.

- Legal Frameworks: Developing legal frameworks that address the ethical implications of machine learning can help establish accountability for its use.

Table of Ethical Considerations and Solutions

| Ethical Consideration | Solution |
|---|---|
| Bias and Fairness | Collect diverse and representative data, develop fair algorithms, regularly monitor and evaluate models. |
| Privacy | Collect data transparently with informed consent, implement robust security measures, minimize data collection, anonymize data. |
| Transparency and Explainability | Develop interpretable models, audit and verify models, maintain human oversight. |
| Accountability | Ensure algorithmic transparency, incorporate human-in-the-loop, develop legal frameworks. |

Conclusion

As machine learning continues to evolve, its potential impact on society keeps growing. Applications already range from personalized medicine to self-driving cars, and more are likely to follow. Ethical considerations remain paramount if these powerful tools are to be developed and deployed responsibly.

By embracing transparency, fairness, and privacy, we can harness the transformative power of machine learning for the betterment of humanity.

Q&A

What are some real-world examples of machine learning in action?

Machine learning powers various applications we encounter daily, including personalized recommendations on streaming platforms, fraud detection in financial transactions, and spam filtering in email.

What are the key challenges in implementing machine learning?

Challenges include ensuring data quality, handling large datasets, choosing the right algorithm, and addressing ethical concerns like bias and privacy.

How can I learn more about machine learning?

Numerous online courses, tutorials, and books are available to help you delve into the world of machine learning. Online platforms like Coursera, edX, and Udacity offer comprehensive programs, while books like “Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow” provide practical guidance.